Introduction to ModelZ

ModelZ is a developer-first platform for prototyping and deploying machine learning models. With our dashboard and APIs, developers can bring their ML ideas to life without the hassle of setting up or maintaining infrastructure.

Why use ModelZ

ModelZ provides the following features out-of-the-box:

- Serverless: ModelZ's serverless architecture scales up or down according to your needs, providing a reliable and scalable way to deploy and prototype machine learning applications at any scale.

- Reduce cost: Pay only for the resources you consume, with no extra charges for idle servers or cold starts. New accounts get 30 free minutes of L4 GPU usage, plus an extra 90 free minutes when you attach a payment method.

- OpenAI-compatible API: ModelZ provides an OpenAI-compatible API, so you can integrate new open-source LLMs into your existing applications with just a few lines of code.

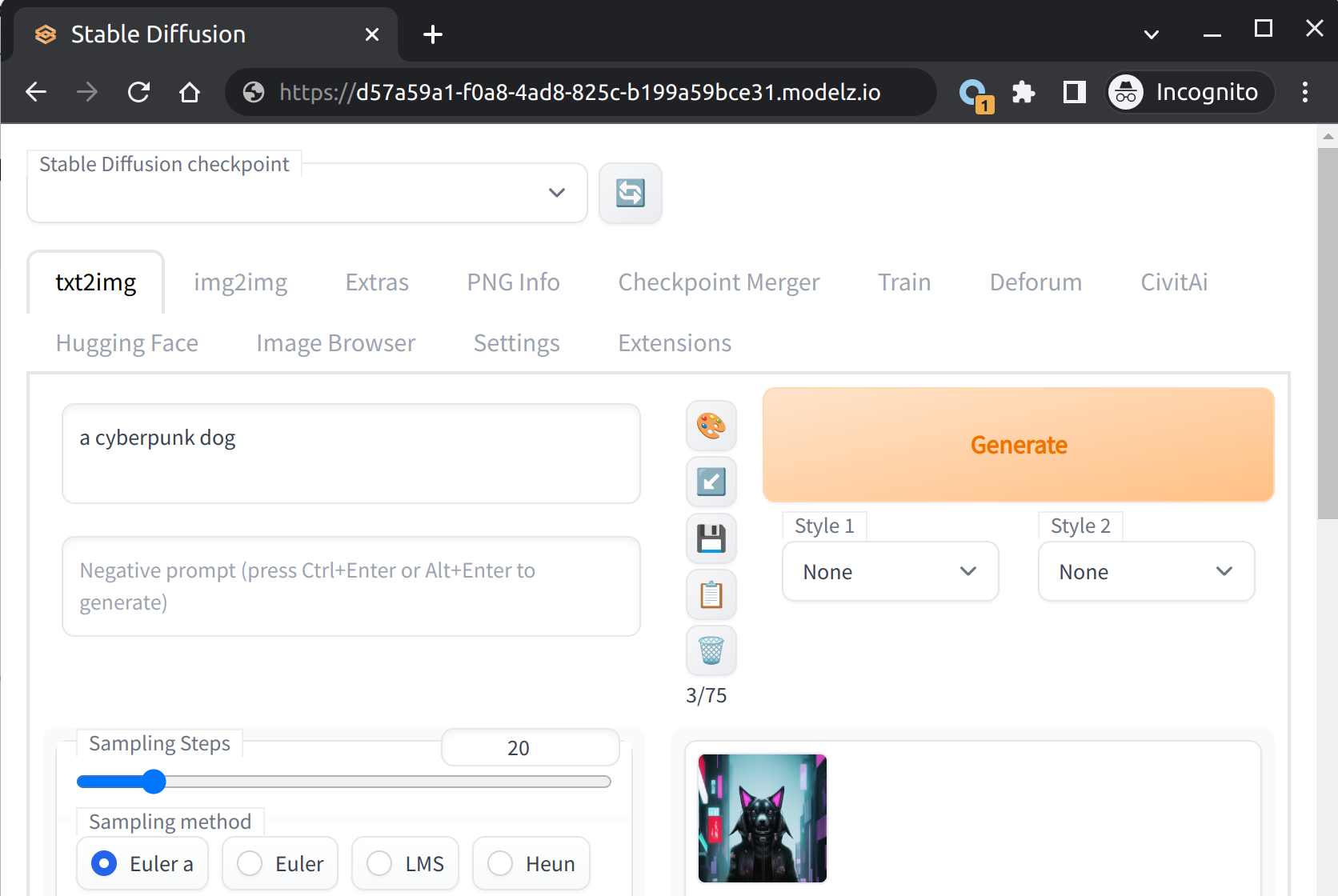

- Prototyping environment: ModelZ provides a prototyping environment with support for Gradio and Streamlit. Through our integration with Hugging Face Spaces, you can access pre-trained models and launch demos in one click, so you can test and iterate on your models quickly.
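Because the API follows the OpenAI format, a request to an LLM deployment looks like an OpenAI chat completion. The sketch below builds such a request with Python's standard library without sending it. It rests on several assumptions that are not stated on this page: the route `/v1/chat/completions`, authentication via the `X-API-Key` header, the helper name `build_chat_request`, and the model name `my-llm` are all hypothetical, so check your deployment page for the real values.

```python
import json
import urllib.request


def build_chat_request(deployment_id, api_key, model, messages):
    """Hypothetical helper: build an OpenAI-style chat completion request."""
    # ASSUMPTION: the OpenAI-compatible route is served at /v1/chat/completions
    url = f"https://{deployment_id}.modelz.io/v1/chat/completions"
    # OpenAI chat completion body: a model name plus a list of role/content messages
    body = {"model": model, "messages": messages}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        # ASSUMPTION: the API key is sent in an X-API-Key header.
        # urllib stores header names capitalized (e.g. "X-api-key");
        # HTTP header names are case-insensitive.
        headers={"Content-Type": "application/json", "X-API-Key": api_key},
        method="POST",
    )


req = build_chat_request(
    "my-deployment",
    "mzi-abcdefg...",
    "my-llm",  # hypothetical model name
    [{"role": "user", "content": "Hello!"}],
)
# Sending it requires network access and a valid key:
# with urllib.request.urlopen(req, timeout=10) as resp:
#     print(json.loads(resp.read()))
```

Keeping the body in the OpenAI schema is what, in principle, lets an existing OpenAI-based application switch to an open-source model by changing only its endpoint and credentials.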

Deploy your first model in 3 minutes

Follow this walkthrough to get started:

- Sign up: Head to our website and sign up for a free account. Once you've signed up, you can access the platform and start deploying and prototyping machine learning models. You will get 1 free GPU hour on us.

- Deploy your first model: You can start from one of our templates. ModelZ's serverless architecture means you don't need to manage or scale infrastructure; we take care of that for you.

Alternatively, launch a demo from Hugging Face Spaces in one click: copy the URL of the demo and paste it into the ModelZ platform.

- Use your model: Once your model is ready, you can start using it. If you deployed a model with Gradio, open it at `https://<deployment-id>.modelz.io`, the URL provided on the deployment page.

If you deployed a model with Mosec, call it with the Python SDK and your API key:

```python
# pip install modelz-py
import modelz

# Replace with your own API key and deployment ID
APIKey = "mzi-abcdefg..."
cli = modelz.ModelzClient(key=APIKey, deployment="abcdgefg...", timeout=10)

# Send an inference request; fill params with the input your deployment expects
cli.inference(params={})
```

Alternatively, you can call the endpoint with `curl`:

```shell
curl --location 'https://{DEPLOYMENT_ID}.modelz.io/inference' \
  --header 'Content-Type: application/json' \
  --header 'X-API-Key: mzi-abcdefg...' \
  --data '{ ... }'
```
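If you prefer not to install the SDK, the request made by the `curl` command can also be built with Python's standard library. This is a minimal sketch that only constructs the request; it reuses the `/inference` endpoint and `X-API-Key` header from the `curl` example, while the helper name `build_inference_request` and the sample payload `{"prompt": "hello"}` are hypothetical placeholders for whatever your deployment expects.

```python
import json
import urllib.request


def build_inference_request(deployment_id, api_key, payload):
    """Hypothetical helper mirroring the curl example above."""
    # Endpoint pattern from the curl example: https://<deployment-id>.modelz.io/inference
    url = f"https://{deployment_id}.modelz.io/inference"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),  # JSON request body, like --data
        # Same headers as the curl example; urllib stores names capitalized
        # (e.g. "X-api-key"), which is fine because HTTP header names are case-insensitive
        headers={"Content-Type": "application/json", "X-API-Key": api_key},
        method="POST",
    )


req = build_inference_request("my-deployment", "mzi-abcdefg...", {"prompt": "hello"})
# Sending it requires network access and a valid key:
# with urllib.request.urlopen(req, timeout=10) as resp:
#     print(json.loads(resp.read()))
```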

Getting started

To get started with ModelZ, check out the following resources: